Phishing red flags are behavioral and technical cues that a message, call, site, or meeting is a social-engineering attempt. Look for urgent or unusual requests, suspicious or mismatched sender addresses, unexpected links/attachments, requests for sensitive data/payment details, fake login pages/websites, executive impersonation, and unexpected or high-pressure phone calls (vishing).

Teach employees to pause, verify, and report every time.

Prefer to listen? In this episode of All Things Human Risk Management, Hoxhunt CTO Pyry Åvist explains how agentic AI spear-phishing beat elite red teamers by 24% and what changes for your training program.

Top phishing red flags (email, text, phone & web)

We wrote this for enterprise training across email phishing, smishing, vishing, social media phishing, and fake websites. Use it to coach employees to protect personal information and sensitive data (credentials, credit card numbers, payment details) while avoiding malicious software and identity theft.

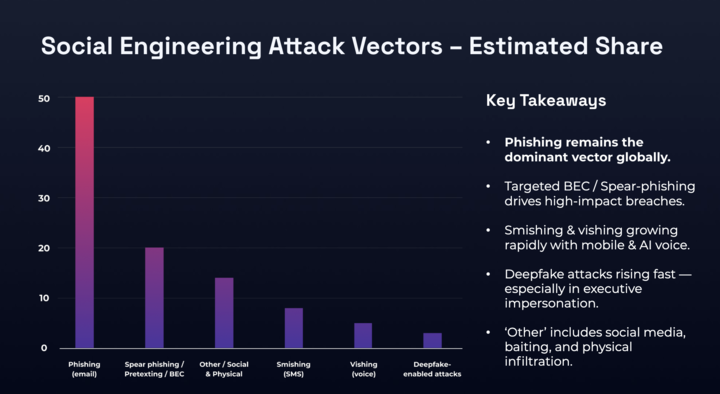

Phishing remains the #1 threat

Phishing is still the front door: APWG logged 1,003,924 attacks in Q1 and 1,130,393 in Q2 2025 - the largest quarterly total since 2023, with QR-code (“quishing”) campaigns surging.

Below you can see the findings of our own SVG phishing attachments mini-report.

The cost of a phishing attack

- Average cost of a breach: $4.44M worldwide (-9% YoY); U.S.: $10.22M (IBM).

- People remain the lever: The “human element” is involved in 60% of breaches (Verizon DBIR 2025).

- Market impact: Public companies average a 7.5% stock drop and a $5.4B mean market-cap loss post-breach (HBR).

Effective training can move the needle

The good news? IBM’s 2025 Cost of a Data Breach Report pegs the average breach at $4.4M, but also shows $1.9M in savings for orgs with extensive security AI/automation.

The takeaway: employee training and behavior are both the biggest amplifier and the biggest mitigator of breach costs, which means teaching employees cybersecurity skills is absolutely vital.

Regardless of the tech you may have in place, the humans in your business will ultimately be your first line of defense against phishing. Recognizing the signs of a phishing attempt is crucial for protecting your organization. Employees at all levels must be equipped with the knowledge to identify and respond to these threats.

14 Phishing red flags your employees need to know (with examples)

1. Suspicious email addresses

Suspicious email addresses are one of the most common indicators of phishing attempts...

And also one of the easiest to spot!

Cybercriminals will often craft email addresses that look like legitimate ones, but there are a few giveaways to watch out for.

Misspelled domains: attackers may use domain names that are near-identical to legitimate ones - changing just a single letter or adding a number.

Unfamiliar sender: Phishing emails will often come from unknown or unfamiliar email addresses.

Mismatched sender and domain names: Display names will often look legitimate, but the sender's email address doesn't always match the company's domain name.

Free or public email domains: Phishing emails may come from free or public email providers (e.g. @gmail.com instead of a corporate domain like @company.com).

Fake forwarded email: Some phishing attempts mimic forwarded emails with fake email chains to make the communication seem legitimate.
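If your security team wants to automate some of these sender checks (for example, to tag inbound mail or pre-triage user reports), here's a minimal Python sketch. It's a heuristic under stated assumptions, not a production filter: `TRUSTED_DOMAINS`, `FREE_MAIL_DOMAINS`, and the small homoglyph table are hypothetical placeholders, and treating an edit distance of 1 as "look-alike" will produce some false positives on short domains.

```python
from email.utils import parseaddr

# Hypothetical allowlist of domains your organization trusts; replace with your own.
TRUSTED_DOMAINS = {"company.com", "dhl.com"}
# Hypothetical, deliberately incomplete list of free/public mail providers.
FREE_MAIL_DOMAINS = {"gmail.com", "outlook.com", "yahoo.com"}
# A few visual look-alikes (order matters: "rn" is collapsed before single characters).
HOMOGLYPHS = [("rn", "m"), ("0", "o"), ("1", "l"), ("I", "l")]


def skeleton(domain: str) -> str:
    """Collapse look-alike characters so 'dhI.com' and 'dhl.com' map to the same string."""
    for fake, real in HOMOGLYPHS:
        domain = domain.replace(fake, real)
    return domain.lower()


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum single-character inserts, deletes, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete from a
                            curr[j - 1] + 1,            # insert into a
                            prev[j - 1] + (ca != cb)))  # substitute
        prev = curr
    return prev[-1]


def sender_red_flags(from_header: str) -> list[str]:
    """Return human-readable red flags for a raw From: header value."""
    display_name, address = parseaddr(from_header)
    if "@" not in address:
        return ["unparseable sender address"]
    domain = address.rsplit("@", 1)[1]
    normalized = domain.lower()  # DNS names are case-insensitive
    if normalized in TRUSTED_DOMAINS:
        return []
    flags = []
    if normalized in FREE_MAIL_DOMAINS:
        flags.append("free/public mail domain")
    for trusted in sorted(TRUSTED_DOMAINS):
        # Misspelled domain: visually identical, or one character away.
        if skeleton(domain) == skeleton(trusted) or levenshtein(normalized, trusted) <= 1:
            flags.append(f"look-alike of {trusted}")
        # Mismatched display name: claims a trusted brand from an untrusted domain.
        brand = trusted.split(".")[0]
        if brand in display_name.lower():
            flags.append(f"display name mentions '{brand}' but domain is {normalized}")
    return flags
```

For example, `sender_red_flags('"DHL Express" <alerts@dhI.com>')` flags both a look-alike of dhl.com and a display name that claims a brand the sending domain doesn't belong to.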

Best practices for employees

Verify the sender: If an email appears to come from a known contact, employees can verify by reaching out to them using an established phone number or email address (just make sure it's not the one in the suspicious email).

Check the domain: Encourage employees to look closely at senders' email addresses for misspellings or inconsistencies in the domain name.

2. Urgent or unusual requests

Phishing attacks will often use a combination of urgency and unusual requests to trick recipients into taking action without thoroughly verifying the email's legitimacy.

Attackers might tell employees that their account is being locked or that it has (ironically) even been hacked.

The more panicked someone is, the more likely they are to miss anything suspicious.

Sense of panic: Attackers aim to create a sense of urgency to induce panic and cloud judgment. This'll make targets more likely to take immediate action without proper scrutiny.

Request to bypass normal procedures: Urgent requests often push recipients to go around standard verification procedures to increase the likelihood of success.

Exploiting authority: Emails claiming to be from high-level executives pair urgent demands with the weight of authority to pressure recipients into complying quickly.

Uncommon requests: Phishing emails will often deviate from normal business processes or involve sensitive actions (e.g. wire transfers, sharing confidential information).

Best practices for employees

Pause and assess: Employees should always take a moment to assess the situation. Attackers rely on haste, so simply taking time to properly review unusual emails will significantly reduce the likelihood of a successful phishing attempt.

Check internal policies: If in doubt, employees can refer to your company’s policies regarding requests for sensitive actions to ensure the request aligns with standard procedures.

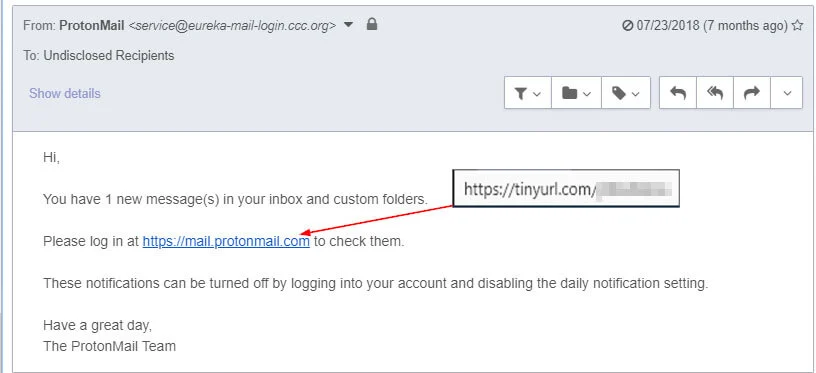

3. Suspicious links or attachments

Once an employee opens a malicious email, the attacker still needs them to take one more step in order to steal sensitive information or download malware onto their device.

This is often done via links.

Phishing emails will contain a link that either downloads malware or takes users to a website/form designed to capture certain information (personal details, bank details, login credentials, etc.).

Although these can sometimes be hard to spot if attackers have made the effort to disguise them, there are a few things that usually give suspicious links away.

Mismatch between displayed and actual URLs: The link text in the email may appear legitimate, but the actual URL (revealed when hovering over the link) might not match up.

Bare hyperlinks: Always be suspicious of emails that contain only a hyperlink with no additional detail.

Misspellings: Malicious links will sometimes contain very minor variations or misspellings to look like legitimate sites (e.g. a single swapped letter, or .com becoming .org or .info).

Shortened URLs: Some attackers use URL-shortening services to mask the real destination of their phishing link. Services like Bitly, TinyURL, and tiny.cc let anyone shorten any URL.

Non-secure websites: Links leading to websites that do not use HTTPS (no padlock symbol in the browser) can be a sign of phishing, as legitimate sites typically secure their connections. The reverse doesn't hold, though: many phishing sites also use HTTPS, so a padlock alone is no proof that a site is legitimate.

Uncommon attachments: Attachments with unusual file types (.iso, .js, .scr) instead of common ones like .pdf or .docx may be grounds for suspicion.
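Two of these red flags, shortened URLs and unusual attachment types, are simple enough to check automatically. The sketch below is a minimal illustration, assuming you already have the attachment filename and the link URL as strings. `RISKY_EXTENSIONS` contains only the types named above, and `SHORTENER_HOSTS` is a hypothetical, incomplete list. The missing-HTTPS check is reported as a weak signal for the reason given above.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Extensions called out above; a real blocklist would be longer and policy-driven.
RISKY_EXTENSIONS = {".iso", ".js", ".scr"}
# Hypothetical, incomplete set of URL-shortener hostnames.
SHORTENER_HOSTS = {"bit.ly", "tinyurl.com", "tiny.cc"}


def attachment_flags(filename: str) -> list[str]:
    """Flag attachments whose final extension is on the risky list."""
    ext = PurePosixPath(filename).suffix.lower()
    return [f"unusual attachment type {ext}"] if ext in RISKY_EXTENSIONS else []


def link_flags(url: str) -> list[str]:
    """Flag shortened links and non-HTTPS links (the latter is only a weak signal)."""
    parts = urlsplit(url)
    flags = []
    if (parts.hostname or "") in SHORTENER_HOSTS:  # .hostname is already lowercased
        flags.append("shortened URL hides the real destination")
    if parts.scheme.lower() != "https":
        flags.append("not HTTPS (weak signal: a padlock does not prove legitimacy)")
    return flags
```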

Best practices for employees

Avoid clicking suspicious links: Employees should not click on links if they're unsure of their legitimacy.

Hover over URLs: By just hovering their mouse over links, employees will be able to view the actual URL. If it looks off or doesn't match the supposed sender’s domain, it shouldn't be clicked on.
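The hover check can also be done in bulk on an HTML email body: compare the host shown in the link text with the host the link actually points to. Here's a minimal sketch using only Python's standard library. `LinkCollector` and `mismatched_links` are hypothetical names, and the sketch only compares link text that itself looks like a URL. Text like "Click here" still needs a human to hover.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urlsplit


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> tag in an HTML email body."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:  # only collect text inside an <a> tag
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(url: str) -> str:
    """Hostname of a URL; bare text such as 'www.company.com/x' gets a scheme first."""
    if "://" not in url:
        url = "http://" + url
    return (urlsplit(url).hostname or "").lower()


def mismatched_links(html_body: str) -> list[tuple[str, str]]:
    """Return (displayed, actual) pairs where URL-like link text points at another host."""
    parser = LinkCollector()
    parser.feed(html_body)
    parser.close()
    suspicious = []
    for href, text in parser.links:
        looks_like_url = "." in text and " " not in text
        if looks_like_url and host_of(text) != host_of(href):
            suspicious.append((text, href))
    return suspicious
```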

4. Poor grammar and spelling

Legitimate businesses usually have standardized templates and proofreading processes that mean spelling and grammar errors are fairly rare.

Phishing attacks may be evolving in sophistication, but often still slip up with grammar mistakes and bad spelling.

This is most likely a result of hastily composed phishing emails designed to bypass spam filters and reach the recipient quickly.

Non-native language use: Many phishing emails originate from non-native speakers of the language they are written in, which means they're more likely to contain grammatical errors and awkward phrasing that make them easier to catch.

Intentional errors: Some emails intentionally include errors to target less vigilant recipients who might overlook these mistakes and fall for phishing scams.

Generic greetings: Legitimate companies and colleagues usually address you by your name or title. Phishing emails often use generic greetings like "Dear Customer," "Dear User," or "Hello," because the attackers do not have access to your personal details.

Best practices for employees

Verify with the sender: If an employee receives an email with suspicious mistakes, they can reach out to the person or organization directly using a known phone number to find out whether or not the email is legitimate.

Email template guidelines: Provide employees with guidelines and templates for professional email communication, making it easier to recognize deviations that might indicate phishing.

5. Requests for sensitive information

Legitimate organizations typically don't ask for sensitive information, like passwords, social security numbers, or credit card details - especially not through unsolicited emails.

These organizations will usually have any information you've submitted already and are unlikely to randomly reach out to request further details.

Urgency and pressure: Phishing emails often rely on creating urgency so that recipients feel pressured to provide sensitive information that they'd usually think twice about.

Odd time stamps: Emails sent at unusual times (e.g., late at night or very early in the morning) could indicate an attempt to strike outside standard business hours, when vigilance might be lower.

Impersonation: Most people are unlikely to pass their details over to a random person. So, attackers will impersonate trusted entities, such as banks, colleagues, or government agencies, to trick recipients into divulging confidential information.

Unsecure channels: Legitimate organizations use secure methods (e.g. secure websites, encrypted communication) to request sensitive information. Email is generally not a secure method for sharing this kind of information.
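The odd-timestamp red flag can be checked from an email's Date: header. Here's a minimal sketch, assuming business hours of 08:00 to 18:00 on weekdays and a recipient time zone given as a fixed UTC offset. Both are placeholder assumptions. Keep in mind that the Date: header is written by the sender and can be forged, so treat a hit as one weak signal among many.

```python
from datetime import time, timedelta, timezone
from email.utils import parsedate_to_datetime

# Assumed working hours; set these per team and location.
BUSINESS_START, BUSINESS_END = time(8, 0), time(18, 0)


def sent_outside_hours(date_header: str, utc_offset_hours: float = 0) -> bool:
    """True if the Date: header falls on a weekend or outside business hours
    in the recipient's time zone (given as a fixed UTC offset for simplicity)."""
    sent = parsedate_to_datetime(date_header)
    if sent.tzinfo is None:  # a "-0000" zone yields a naive datetime; treat it as UTC
        sent = sent.replace(tzinfo=timezone.utc)
    local = sent.astimezone(timezone(timedelta(hours=utc_offset_hours)))
    is_weekend = local.weekday() >= 5  # Saturday = 5, Sunday = 6
    return is_weekend or not (BUSINESS_START <= local.time() < BUSINESS_END)
```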

Best practices for employees

Do not respond: Employees should never provide sensitive information via email before verifying that the sender and their request are legitimate.

Be wary of sending information via email: Since most organizations won't ask you to disclose sensitive information via email, employees should always be wary of these requests and report them immediately - they're unlikely to be the only ones who received an email.

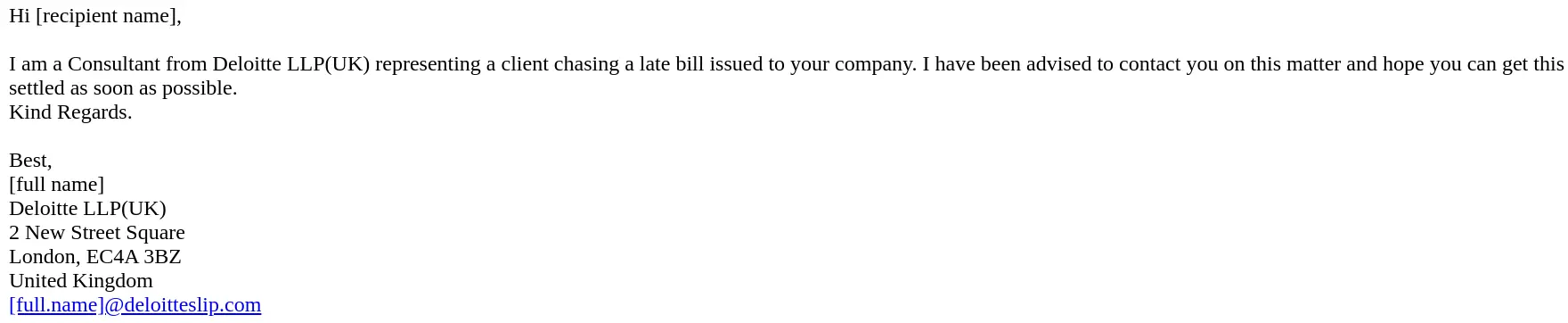

6. Unexpected invoice or payment requests

Unexpected invoice or payment requests are often used in phishing attacks to trick recipients into transferring money or giving away financial information.

Phishing emails posing as invoices issued by one company to another can be particularly tricky to spot.

They tend to use tactics like exploiting compromised email addresses to fly under the radar.

Lack of context: Genuine companies usually provide notice or context for invoices and payment requests. So, employees should be suspicious of any unsolicited requests.

Urgency and pressure: Legitimate requests generally won't create a sense of urgency or try to pressure recipients to make payments.

Unusual payment methods: Requests for payment via unconventional methods, such as wire transfers to unknown accounts, cryptocurrency, or gift cards.

Suspicious or unfamiliar details: The invoice may contain phishing red flags such as unfamiliar company names, incorrect details, or unusual formatting.
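Finance teams can turn several of these red flags into a pre-payment check. Compare each invoice against the vendor details already on file, and hold any mismatch until someone calls the vendor back on a known number. The sketch below is illustrative only. `VENDOR_MASTER`, the `Invoice` fields, and the payment-method labels are hypothetical, and the IBAN shown is a commonly used example value rather than a real account.

```python
from dataclasses import dataclass
from typing import Optional

# Payment methods the red flags above call unusual for business invoices.
UNUSUAL_METHODS = {"gift_card", "crypto"}
# Hypothetical vendor master data: bank details already on file, keyed by vendor ID.
VENDOR_MASTER = {"acme-001": {"iban": "FI2112345600000785"}}


@dataclass
class Invoice:
    vendor_id: str
    iban: str
    method: str               # e.g. "bank_transfer", "gift_card", "crypto"
    po_number: Optional[str]  # None when there is no PO or other prior context


def invoice_red_flags(inv: Invoice) -> list[str]:
    """Return reasons to hold payment until the vendor is verified by callback."""
    flags = []
    vendor = VENDOR_MASTER.get(inv.vendor_id)
    iban = inv.iban.replace(" ", "").upper()  # compare IBANs without spacing
    if vendor is None:
        flags.append("unknown vendor")
    elif iban != vendor["iban"]:
        flags.append("bank details differ from vendor record")
    if inv.method in UNUSUAL_METHODS:
        flags.append(f"unusual payment method: {inv.method}")
    if inv.po_number is None:
        flags.append("no PO or prior context")
    return flags
```

An empty result doesn't prove that an invoice is genuine, because a compromised vendor mailbox can send a perfectly normal-looking request. Any flag, though, is a reason to stop and verify.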

Best practices for employees

Review previous correspondence: Employees should check for previous correspondence or agreements related to the invoice or payment request.

Do not click links or open attachments: Make sure employees know not to click on any links or open attachments in the email - this could lead to malware or phishing sites.

7. Unusual or 'off-looking' design

Phishing emails often have design elements that can appear unusual or "off-looking" compared to legitimate communications.

Poor formatting: Legitimate emails from reputable organizations usually have consistent and professional formatting. Poor layout, inconsistent font styles, sizes, and colors can indicate a phishing attempt.

Low-quality images and logos: Phishing messages may contain low-resolution images or logos that look distorted or pixelated. Authentic emails generally use high-quality images.

Mismatched branding: Official emails adhere to a company’s branding guidelines. If the email design deviates from what you usually see (e.g., different colors, logos, or font styles), it might be a phishing attempt.

Inconsistent contact information: Contact details that differ from the official channels you know or look odd (e.g., personal email addresses or phone numbers not associated with the organization).

Best practices for employees

Compare with previous emails: Employees can compare the design and layout with previous emails from the same sender - looking for inconsistencies or deviations from the norm.

Contact the sender: Encourage employees to use established contact information to confirm whether or not the email is genuine.

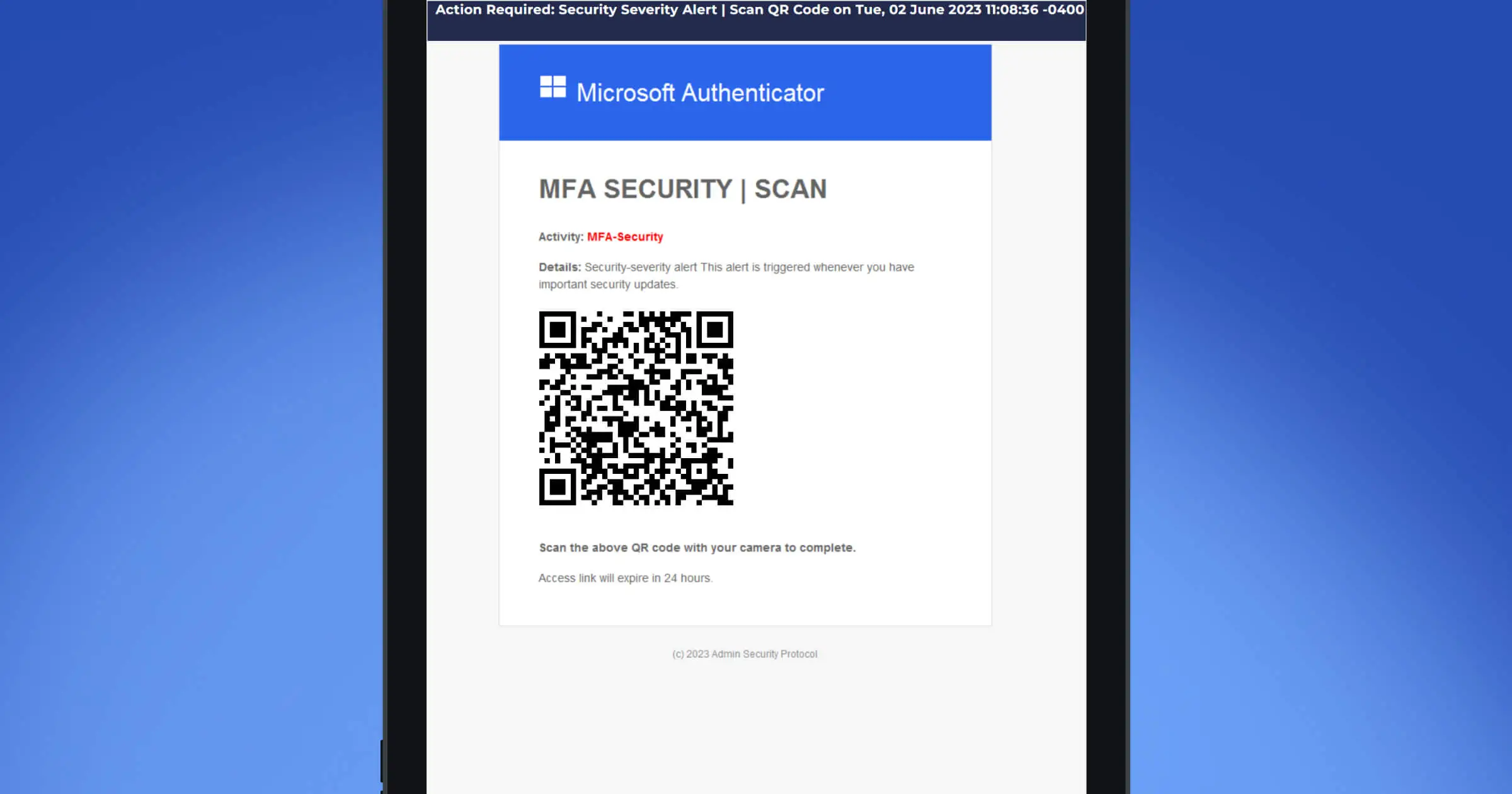

8. Activity alerts

You're probably used to seeing phishing emails attempting to steal your personal information...

But in this rather ironic twist, attackers are now sending emails warning you about phishing attacks.

This method works for attackers because it's so simple.

These fraudulent messages appear to be a friendly warning from a trusted source.

Some of these campaigns are actually clone phishing attacks - they use duplicates of legitimate emails from legitimate companies to increase credibility.

Account compromise notifications: Fraudulent emails often claim there has been suspicious activity on your account and request you to click a link to verify or reset your credentials.

Request for immediate action: These emails usually include a call to action, such as clicking a link, providing login credentials, or verifying account details to resolve the supposed issue.

Urgency and panic: Unusual activity alerts aim to cause panic so that recipients click on links quickly without thinking.

Best practices for employees

Review account activity: Employees can always log in to their account directly through the official website or app to check for any unusual activity.

Pay close attention to the sender’s email address: Employees may be able to spot anomalies in the sender's address (e.g. dhl.com vs. dhI.com, where a capital I stands in for a lowercase l). When it comes to phishing, the devil’s often in the detail.

Do a little detective work before clicking: Employees should hover their mouse over the link to reveal the real URL it leads to (just make sure they don't actually click).

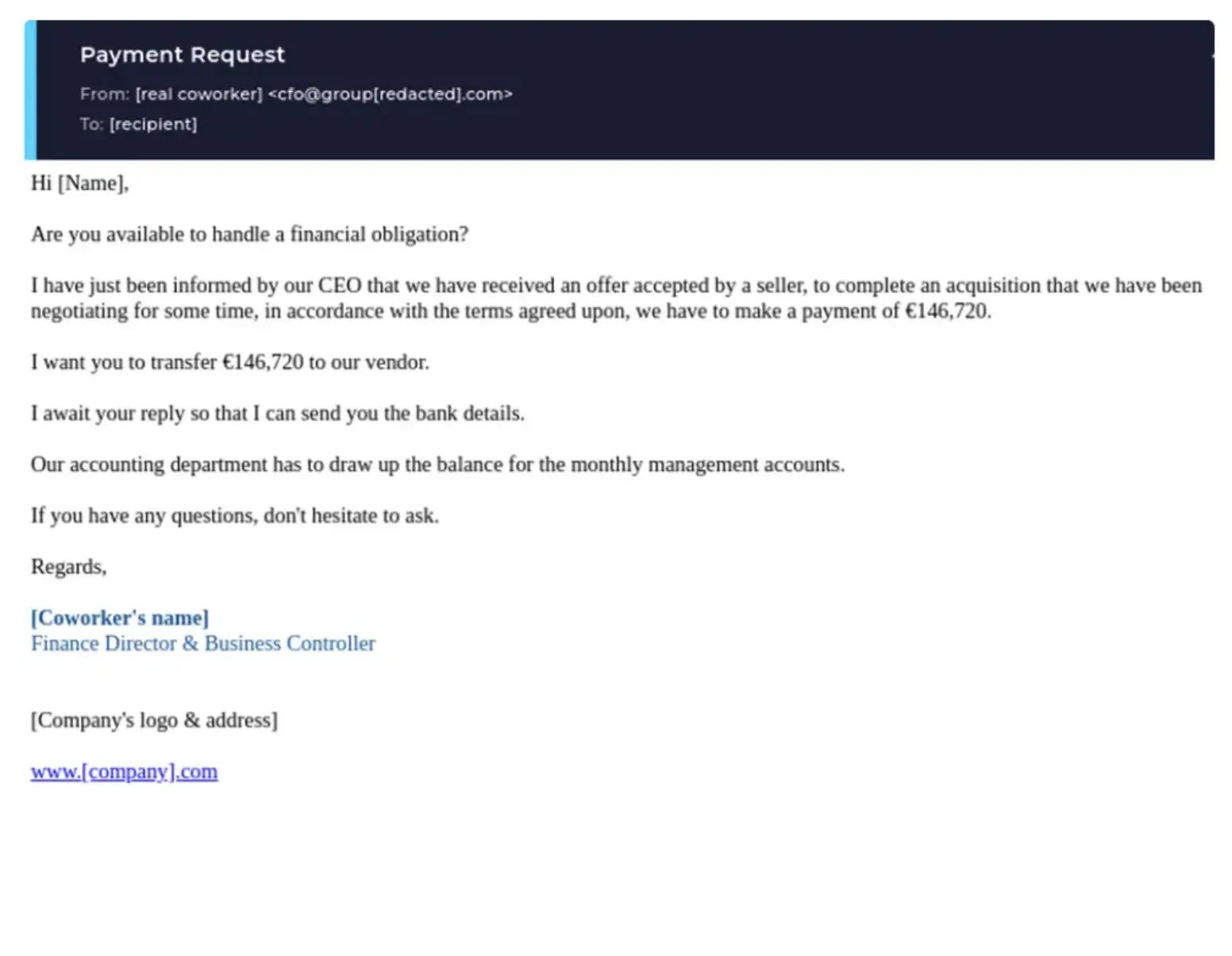

9. Requests from high-level executives

Emails that appear to come from high-level executives (often referred to as whaling phishing attacks) exploit the authority and urgency associated with executive requests.

Whaling phishing attacks are costing enterprises around $1.8 billion each year.

Attackers use sophisticated social engineering tactics and reconnaissance to create convincing, personalized messages that make these attacks particularly hard to spot.

Authority and urgency: Emails may use the name and email address of high-level executives (e.g., CEO, CFO) to create a sense of urgency and importance.

Generic or slightly off email addresses: The sender’s email address may look legitimate at first glance but might contain slight variations or misspellings that are easy to overlook.

Lack of context: Legitimate executive requests typically include context or background information. Phishing emails often lack this context or provide vague details.

Unusual time frames: Requests sent outside of normal business hours are a common phishing red flag.

Best practices for employees

Contact the executive directly: Employees should use a known contact method (e.g. phone call, official internal messaging system) to confirm the request with the executive.

Consult security team or IT department: If employees are unsure about the email’s authenticity, they should seek guidance from the IT/security team.

10. Unexpected calls

Phishing attempts are not limited to emails and can occur over the phone, often referred to as "vishing".

In a vishing attack, the caller pretends to be someone in authority or from a trusted organization.

Attackers will use vishing to steal sensitive information, such as a verification code to gain access to your company's bank account.

Unsolicited nature: Unexpected calls from unknown or blocked numbers claiming to be from reputable organizations, such as banks or government agencies, should generally be considered suspicious.

Requests for sensitive information: Phishing calls often involve requests for sensitive information, such as Social Security numbers, bank details, login credentials, or personal data.

Urgent or threatening tone: The caller may use urgency or threats to pressure you into providing information or taking action.

Best practices for employees

Never give away sensitive information in phone calls: If employees are unsure whether the request is legitimate, they should end the call and look up the organization's official customer service number to verify whether the call was real.

Ask for contact details: Request the caller's name, department, and contact information. Do not use the contact details provided by the caller to verify their identity.

11. QR codes

Phishing isn’t just links - attackers embed QR images in emails, PDFs, posters, or packages to send users to fake login or payment pages. Scanning shifts you onto mobile, where small screens and autofill hide tell-tale signs. Employees should treat QR codes like links: verify the destination before you do anything.

Image-based links evade scanners: QR images can bypass some email link checks, making malicious destinations harder to detect.

Mobile-first traps: On phones, long URLs truncate and spoofed domains are easier to miss - perfect for credential harvesters and invoice scams.

Context mismatch: “Updated bank details” or “security verification” via QR is uncommon for most business processes; treat as high risk.

Best practices for employees

- Don’t scan unknown codes: If a QR arrives by email/PDF or on a poster/package, preview the URL first; if the domain isn’t the official one, close it and navigate manually from a bookmark.

- Never approve payments or MFA via QR: For vendor changes, invoices, or account resets, ignore the code, call a known number, and follow your client-verification process.
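"Preview the URL first" means checking the decoded destination against your real domains. A naive "contains company.com" test gets fooled by hosts like company.com.evil.io. Here's a minimal sketch of a stricter check, assuming the QR code has already been decoded to text (by the phone's camera preview or a QR library, which isn't shown). `OFFICIAL_DOMAINS` is a hypothetical allowlist.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: the domains your real login and payment pages live on.
OFFICIAL_DOMAINS = {"company.com"}


def is_official_destination(decoded_qr_text: str) -> bool:
    """True only for HTTPS URLs whose host is an official domain or one of its subdomains."""
    parts = urlsplit(decoded_qr_text.strip())
    if parts.scheme != "https":
        return False
    # .hostname drops any "user@" prefix, so "https://company.com@evil.io" yields "evil.io".
    host = (parts.hostname or "").rstrip(".")
    # Suffix match on a dot boundary, so "company.com.evil.io" and "evilcompany.com" fail.
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)
```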

12. Deepfake voice/video & live-meeting fraud

Attackers now impersonate executives or vendors on phone and video calls using AI voice cloning and face-swapping. These scams often happen in “urgent” ad-hoc meetings (Zoom/Teams/Meet) where you’re pressured to change payment details, share MFA codes, or grant remote access. Treat unexpected live requests as high risk.

Live pressure to bypass controls: Mid-call asks to “keep this confidential,” “approve now,” or “turn off content filtering” are classic tells.

Identity cues can be forged: Caller ID, profile photos, and even live video can be spoofed; lighting, lip-sync, and eye-blink artifacts might be subtle or absent.

Context mismatch: Requests that skip tickets/POs/approvals - or use personal accounts for payments - don’t match normal process.

Best practices for employees

Verify out-of-band: End the call and call back using a directory number or internal chat to confirm the request; require a ticket/approval per policy.

Never share secrets live: Do not disclose passwords, MFA codes, wallet seeds, or one-time links on calls; navigate manually to systems - don’t follow links dropped in chat.

Use the finance/vendor runbook: For bank-detail or invoice changes, follow client-verification steps and dual-control sign-offs; no exceptions for “urgent” asks.

Report and preserve evidence: Capture meeting details (time, participants, chat log/screenshots) and report to security for awareness training and follow-up.

Want to train users on realistic deepfake attacks? Take a look at Hoxhunt's deepfake simulation training.

13. “Security/IT callback” scams

Attackers send emails, texts, or voicemails that tell you to call a number to “restore access,” “re-verify your account,” or “remove malware.” The goal is to move users into a live call where the attacker can social-engineer credentials or one-time MFA codes, or get them to install remote-access tools.

Unsolicited support prompts: Messages claim to be from your email provider, bank, or “Microsoft/Apple Support,” urging an immediate callback to fix an issue.

Number-in-message trap: The callback number routes to the attacker, who impersonates IT service providers or fraud teams and asks for account details.

Live escalation: On the call, they push for MFA codes, password resets, or to install “security” apps - sometimes asking you to bypass content filtering.

Best practices for employees

Never call numbers in the message: Use your internal helpdesk or vendor number from the directory/official site.

Do not share secrets on calls: No passwords, MFA codes, recovery links, or card/bank details - ever.

Refuse remote tools: Don’t install software or grant screen control unless initiated via approved IT ticketing and verified technician.

Verify through policy: For account lock or payment issues, follow your client-verification or fraud-handling runbook; loop in fraud departments when money is involved.

Report it: Capture the message, number, and any call details; report to security so the domain/number can be blocked.

14. MFA push-bombing / fatigue attacks

Attackers who already know (or have guessed) a user's password will spam MFA prompts to their phone/watch until the user accidentally hits “Approve” or is convinced on a call/chat to “just accept to stop the notifications.” This turns a stolen password into full account access.

Relentless prompts: Multiple MFA requests in quick succession - often at night or during meetings - to nudge an accidental approval.

Social-engineering overlay: A “helpdesk” caller or chat DM may claim the prompts are a security check and tell you to approve.

Odd context: You aren’t logging in, or the prompt shows a location/device that doesn’t match your activity.
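Employees see these prompts one at a time, but an identity or security team can watch for the same "relentless prompts" pattern across all accounts. Here's a minimal sliding-window sketch, assuming you can export per-user MFA prompt timestamps (in seconds) from your identity provider. The defaults of 3 prompts in 5 minutes are illustrative assumptions, not recommended values.

```python
from collections import deque


def push_bombing_alerts(prompt_times: list[float],
                        window_s: float = 300.0,
                        threshold: int = 3) -> list[float]:
    """Return the timestamps at which `threshold` or more MFA prompts for one user
    have arrived within the last `window_s` seconds (sliding window)."""
    recent: deque = deque()
    alerts = []
    for t in sorted(prompt_times):
        recent.append(t)
        # Drop prompts that have slid out of the window (t itself always stays).
        while t - recent[0] > window_s:
            recent.popleft()
        if len(recent) >= threshold:
            alerts.append(t)
    return alerts
```

For example, prompts at 0, 30, and 60 seconds trigger an alert at 60 seconds. Prompts spread several minutes apart do not.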

Best practices for employees

Deny every unexpected prompt: If you didn’t initiate a login, tap Deny/Report.

Change your password immediately: Report the incident as well, and assume your password has been exposed.

Use number matching / verification codes: If your authenticator app supports number matching or reason codes, turn it on; avoid phone-call approvals and SMS codes wherever you can.

Re-authenticate safely: Open the app/site yourself (no links) and sign in from a bookmarked login page.
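Number matching works because the approval depends on information that only the person actually signing in can see. The sign-in screen shows a short number, and the push is only approved if that number is typed into the authenticator app. A push-bombing victim isn't looking at any sign-in screen, so they have nothing to type. The sketch below shows only that core idea under simplifying assumptions (two-digit codes, no expiry or retry limits). It is not any vendor's actual protocol.

```python
import hmac
import secrets


def new_challenge() -> str:
    """Two-digit number displayed on the sign-in screen, never in the push itself."""
    return f"{secrets.randbelow(100):02d}"


def approve_push(number_on_screen: str, number_typed_in_app: str) -> bool:
    """Approve only when the user types the number from the screen they are signing in on."""
    # compare_digest avoids leaking how many characters matched via timing.
    return hmac.compare_digest(number_on_screen, number_typed_in_app)
```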

Preventing phishing attacks: best practices for businesses

Prevent phishing with a behavior-first program backed by modern controls: make reporting one-tap, run adaptive simulations across email/SMS/voice/meetings, add short micro-lessons, and plug training data into your incident response.

1) Make reporting one-tap and send it to the right place

Unify to a single reporting button and remove ambiguity. Give instant feedback to reinforce the habit and reduce SOC workload.

2) Run adaptive, role-aware training (short, frequent, personalized)

Ditch “set-and-forget.” Use individualized difficulty, gamification, and micro-training triggered by real events to sustain engagement and skills. Tie user-level insights to business impact.

3) Connect training to detection & incident response

Your report button isn’t just for awareness - link simulation and real-threat reporting to SOC outcomes. Faster reporting reduces damage; engagement → measurable risk reduction.

4) Train every channel - email, SMS, QR, phone, and live meetings

Simulate QR, smishing, vishing, and deepfake scenarios (Teams/Zoom/Meet) so people practice verification under pressure, not just inbox tells.

5) Teach premise-first verification for deepfakes

Don’t “hunt pixels.” Coach users to question the premise (unusual, urgent, or secret requests) and verify via a known channel.

6) Add clear callback policies for phone-based attacks

Give people air-cover to drop off a call and ring back via a directory number; agree simple pass-phrases where small-talk norms are low. Make reporting paths obvious.

7) Turn on layered email security (and keep it tuned)

Use advanced filtering/sandboxing; watch for quirks like URL rewriting that can skew tests. Keep content fresh to avoid “template fatigue.”

8) Implement multi-factor authentication (MFA)

MFA won’t stop every attack, but it meaningfully raises the bar for account takeover - especially when paired with reporting and user coaching.

9) Standardize reporting channels & reminders

Document where to report and what happens next so people aren’t stuck in the moment. Reinforce this in onboarding and refreshers.

10) Patch and harden the basics

Keep OS/apps/AV current to remove known footholds for payload-based phish.

11) Encourage “mark as suspicious” - don’t just delete

Teach everyone to mark/report suspicious email; those submissions improve filters and accelerate takedown.

Proof that behavior-first works (results you can reference)

Organizations using Hoxhunt typically see 20× lower failure, 90%+ engagement, and 75%+ detect rates - because training is personalized, frequent, and tied to real threats.

Below you can see the findings of our Phishing Trends Report. The standard, pre-Hoxhunt SAT performance baseline is 7% Success, 20% Failure, and 80% Miss rates (the figures don’t add up to 100% because they come from separate data sets).

Many enterprise organizations with legacy SAT models have stagnant success rates of about 10%, with limited visibility into real threat reporting and dwell time. These metrics all improve drastically once organizations onboard with Hoxhunt and keep improving over time, demonstrating sustainable engagement and resilience.

- Behavior-based engagement soars by over 6x.

- Failure rates are cut in half. And that's just with the onboarding.

- Half of employees report a real threat 6 months into training, and two-thirds report one within a year of beginning training.

What is Hoxhunt and how does our phishing training work?

Hoxhunt is behavior-first phishing training that turns every employee into an early-warning sensor. We deliver adaptive phishing simulations across email, SMS, QR, phone, social, and live meetings, pair them with one-tap reporting and instant coaching, and feed signals to your SOC - so people learn fast, report faster, and risk trends become measurable.

Built for real behavior change (not checkboxes)

- Adaptive & personal: Difficulty and content adjust by role, location, and skill.

- Multi-channel simulations: Email phishing, smishing, vishing, QR “quishing,” social DMs, and deepfake voice/video meeting prompts.

- One-tap reporting + instant coaching: A single report button routes to security and triggers bite-size feedback so good habits stick.

- SOC-ready signals: User reports (simulated and real) flow into triage to cut time-to-report and credential-submission rates.

- Always-on program: Frequent micro-lessons, localized content, and progressive rollouts keep engagement high without disrupting work.

Below you can see exactly what Hoxhunt simulations look like when they land in a user's inbox.

Phishing red flags FAQ

How should we phase a behavior-first rollout?

Start with email + reporting habit, then add SMS/QR/voice/meetings.

- Ship a single Report button and weekly micro-sims with instant coaching.

- Then tailor by role (finance/VIPs/support) and add smishing, vishing, QR.

- After 90+ days, use deepfake/meeting scenarios; pipe user signals into SOC.

Which metrics actually matter and what’s “good”?

Track reporting rate and time-to-report for both real and simulated threats. Push leadership updates on operational deltas, not vanity “completion” charts.
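Both metrics are easy to compute once each delivery and report is logged. Here's a minimal sketch, assuming a hypothetical `Delivery` record per email per user with epoch-second timestamps. Run it separately on real and simulated threats. It uses the median rather than the mean for time-to-report, so that a few reports arriving days later don't dominate the figure.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Delivery:
    """One simulated or real phishing email delivered to one user (hypothetical schema)."""
    user: str
    delivered_at: float           # epoch seconds
    reported_at: Optional[float]  # None if the user never reported it


def reporting_metrics(deliveries: list[Delivery]) -> tuple[float, Optional[float]]:
    """Return (reporting rate, median time-to-report in seconds)."""
    if not deliveries:
        return 0.0, None
    reported = [d for d in deliveries if d.reported_at is not None]
    rate = len(reported) / len(deliveries)
    times = [d.reported_at - d.delivered_at for d in reported]
    return rate, (median(times) if times else None)
```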

How do we wire the Report button to SOC without drowning in noise?

Auto-triage user reports (dedupe, reputation, sandbox), send verdicts back to mail filters, and close the loop with friendly feedback so the habit sticks. This link between sim and real-threat reporting is where measurable outcomes emerge.
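The dedupe step can be as simple as fingerprinting each reported message and grouping identical fingerprints, so the SOC triages a campaign once instead of once per reporter. The sketch below is one illustrative heuristic, not a standard. It keys on sender, subject with Re:/Fwd: prefixes stripped, and the set of URLs. The report fields are hypothetical, and the reputation and sandbox steps mentioned above are out of scope here.

```python
import hashlib
import re
from collections import defaultdict

URL_RE = re.compile(r"https?://[^\s\"'<>]+", re.IGNORECASE)
REPLY_PREFIX_RE = re.compile(r"^(?:(?:re|fwd?):\s*)+", re.IGNORECASE)


def fingerprint(sender: str, subject: str, body: str) -> str:
    """Stable key for 'the same campaign': sender, subject without Re:/Fwd:, and URLs."""
    norm_subject = REPLY_PREFIX_RE.sub("", subject.strip()).lower()
    urls = sorted(set(URL_RE.findall(body)))
    key = "|".join([sender.lower(), norm_subject, *urls])
    return hashlib.sha256(key.encode("utf-8")).hexdigest()


def cluster_reports(reports: list[dict[str, str]]) -> dict[str, list[str]]:
    """Group user reports by fingerprint; values are the reporters in each cluster."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for r in reports:
        clusters[fingerprint(r["sender"], r["subject"], r["body"])].append(r["reporter"])
    return dict(clusters)
```

Each cluster's verdict can then be sent back to every reporter in it, which closes the feedback loop without extra analyst work.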

What’s the right cadence for simulations and coaching?

Short and frequent beats long and annual. Practitioners favor a monthly cadence with drip campaigns that take minutes, keeping awareness fresh while reducing admin overhead.

How should we handle deepfake voice/video & live-meeting fraud?

Train premise-first verification: users won’t always spot pixel tells, so coach them to question unusual/urgent/secret requests and verify via a second channel (directory callback, ticket). Build scenarios that escalate to a video call (Teams/Meet/Zoom), where the request is made.

What policy works for vishing without hurting CX?

Give people air-cover to drop off and call back using a directory number; encourage quick side-channel pings (Slack/Teams) and, where small-talk is uncommon, agree simple challenge/response passphrases for high-risk workflows.

How “real” should simulations be - any ethics guardrails?

Realism drives learning but no-tell “gotchas” can erode trust. Use realism to teach - not to trap - and pair with immediate coaching.

How do we avoid “security theater” and checkbox fatigue?

Shift from annual slideshows to behavior + outcomes; acknowledge compliance needs but optimize for reporting/TTR and culture. Practitioners warn that punitive programs backfire - recognize reporters and make security a partner.

How do we increase engagement without cringe?

Light gamification works when it rewards positive behavior (fast, accurate reports), not shaming. Some teams saw higher reporting after adding small public kudos or perks.

What’s a humane plan for “repeat offenders”?

Treat it as a risk-based coaching problem: targeted 1:1 help and more frequent small drills; adjust permissions and require extra approvals/MFA for sensitive actions rather than blanket punishment.
